Credit: Rita El Khoury / Android Authority

It’s a known fact that ChatGPT, Gemini, and most AI chatbots sometimes hallucinate answers. They make things up out of thin air, lie to please you, and contort their answers the moment you challenge them. Although these instances are becoming rarer, they still happen, and they completely ruin trust. If I never know when Gemini is telling the truth and when it’s lying to me, what’s the point of even using it?

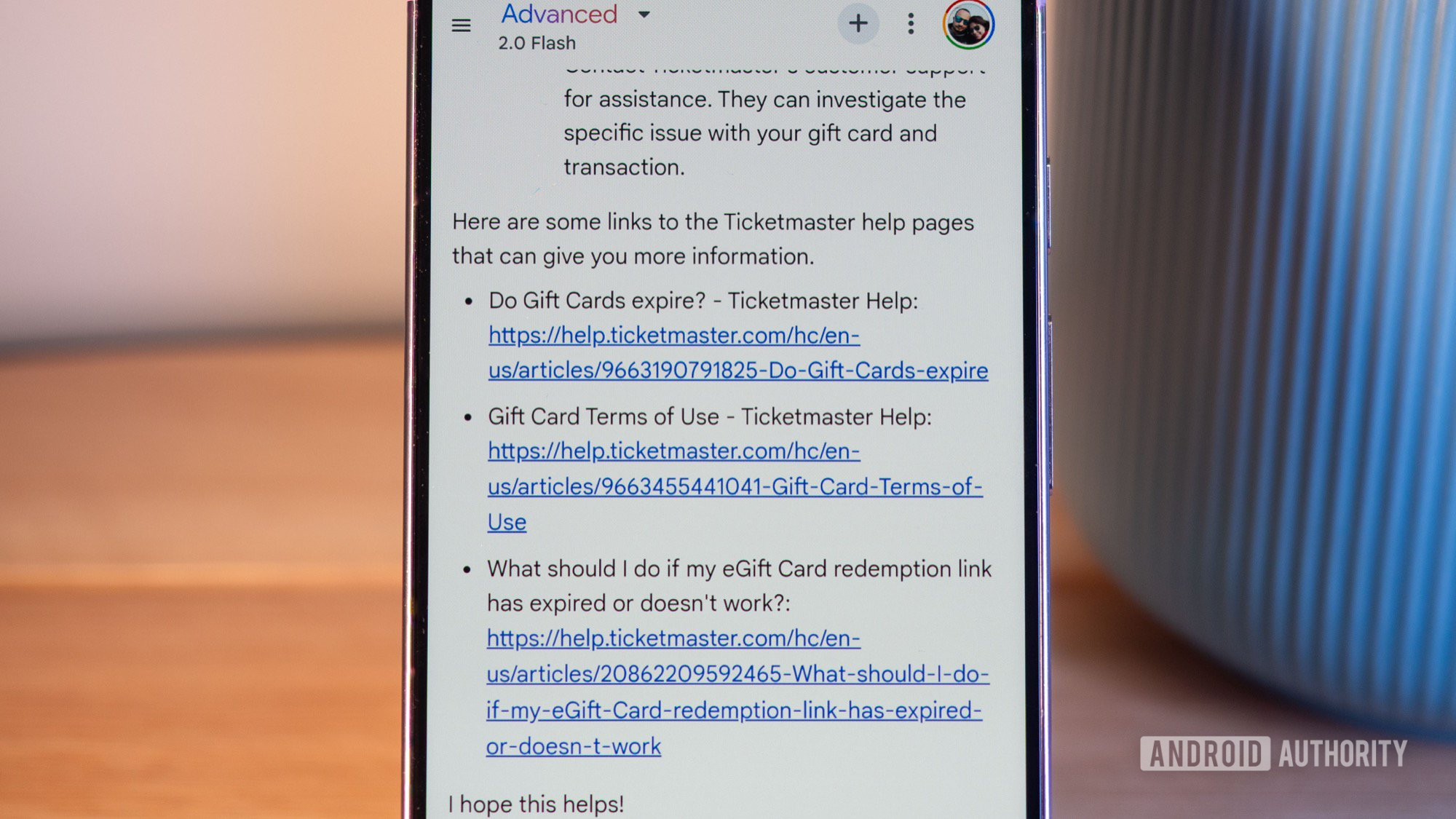

That’s why I mostly gravitate towards Perplexity for my search queries. I like seeing the sources in front of me and being able to click to read more from trustworthy sites. Then it occurred to me: There’s a way I can make Gemini behave more like Perplexity, and all it takes is a single sentence!
